In September 2019, Google will stop supporting the noindex directive in robots.txt. Put simply, Google will no longer honor noindex rules placed in robots.txt files, and this change may affect your Google ranking.

The reason for this change is to maintain a high standard for the search engine and to prepare for future open-source releases. To that end, Google will remove all code that handles the noindex directive in robots.txt on September 1, 2019.

While open-sourcing its robots.txt parser library, Google's developers analyzed how robots.txt rules are used. They focused on rules that are not supported by the internet draft, such as crawl-delay, nofollow, and noindex. Usage of these rules in relation to Googlebot is very low, and where they do appear they are in most cases contradicted by other rules in the same file.

These mistakes hurt a website's ranking in ways that webmasters did not intend. The update released on September 1, 2019 is meant to fix this.
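If you want to check whether your own robots.txt still relies on one of these rules, a small script can flag them. The following is a minimal sketch, not Google's parser: the function name `find_unsupported_rules` and the sample file contents are assumptions made for illustration, and the rule list contains only the three rules named above.

```python
# Rules named in this article as unsupported by the internet draft.
UNSUPPORTED_RULES = {"noindex", "nofollow", "crawl-delay"}


def find_unsupported_rules(robots_txt):
    """Return (line_number, line) pairs for lines that use an unsupported rule."""
    hits = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if ":" not in line:
            continue  # blank or malformed line, nothing to check
        field = line.split(":", 1)[0].strip().lower()
        if field in UNSUPPORTED_RULES:
            hits.append((number, line))
    return hits


# Hypothetical robots.txt contents, used only for illustration.
SAMPLE = """\
User-agent: *
Disallow: /private/
Noindex: /drafts/
Crawl-delay: 10
"""

for number, line in find_unsupported_rules(SAMPLE):
    print(f"line {number}: {line}")
```

Any line the script reports is a candidate for replacement with one of the alternatives described below.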

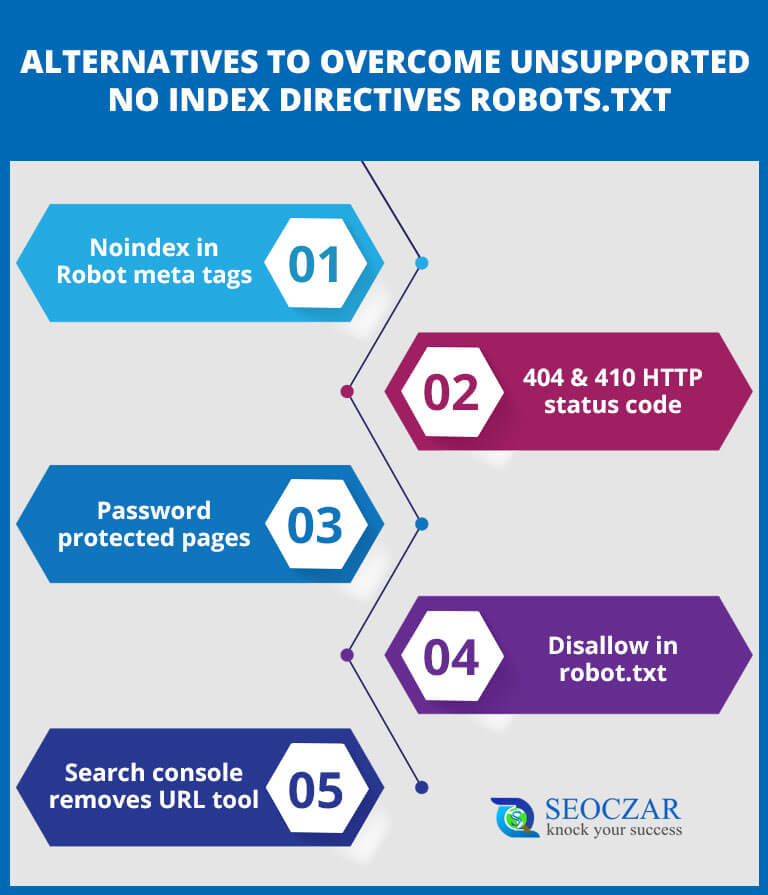

Meanwhile, Google has suggested alternatives for controlling the crawling and indexing of pages.

Alternatives to the unsupported noindex directive in robots.txt

1. Noindex in robots meta tags

Instead of a noindex directive in robots.txt, use noindex in a robots meta tag. It is supported both in the HTTP response header and in HTML. A noindex directive in a meta tag is the most effective way to remove URLs from the index when crawling is allowed.
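To verify that a page really sends noindex, you can look in both places: the `X-Robots-Tag` response header and the `<meta name="robots">` tag in the HTML. The sketch below does this with Python's standard `html.parser` module. The helper names `RobotsMetaParser` and `is_noindex` are assumptions for illustration, and the check is simplified: it ignores crawler-specific forms such as `<meta name="googlebot">` or a user-agent prefix in the header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindex(headers, html):
    """Return True if the header or the HTML carries a noindex directive."""
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            header_value = value
    header_directives = [d.strip().lower() for d in header_value.split(",")]

    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in header_directives or "noindex" in parser.directives


# Hypothetical page, used only for illustration.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindex({}, page))
print(is_noindex({"X-Robots-Tag": "noindex"}, "<p>No meta tag here</p>"))
```

The header form is useful for non-HTML files such as PDFs, where there is no place to put a meta tag.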

2. 404 and 410 HTTP status codes

404 and 410 are HTTP error codes returned when a URL does not exist. They can also be used deliberately: Google drops such URLs from its index once they have been crawled and processed. So if you want a page removed from the index, return a 404 or 410 HTTP status code for that URL.
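How you return these codes depends on your server or CMS. As a rough sketch using only Python's standard `http.server` module, the handler below answers 410 for pages that were removed on purpose, 404 for anything unknown, and 200 for live pages. The paths, the `status_for` helper, and the `run` function are hypothetical and exist only for this illustration; query strings are not handled.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical paths, used only for illustration.
GONE_PATHS = {"/old-campaign", "/discontinued-product"}
LIVE_PAGES = {"/": b"<h1>Home</h1>"}


def status_for(path):
    """Pick the HTTP status code for a request path."""
    if path in GONE_PATHS:
        return 410  # removed on purpose and not coming back
    if path in LIVE_PAGES:
        return 200
    return 404  # unknown URL


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status = status_for(self.path)
        body = LIVE_PAGES[self.path] if status == 200 else b""
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port=8000):
    """Start a local test server; stop it with Ctrl+C."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `run()` starts the server locally, after which a request to `/old-campaign` should come back with status 410.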

3. Password-protected pages

Some pages on a website sit behind a login, such as subscription or paywalled content. Hiding a page behind a login generally removes it from Google's index, unless markup is used to indicate subscription or paywalled content. So this is another alternative to the unsupported noindex directive in robots.txt.

4. Disallow in robots.txt

Search engines can only index pages that they know about. So if you don't want a page to be crawled, you need to hide it from search engine bots with a Disallow rule. Blocking content from being crawled usually means it won't be indexed by the search engine.

However, a search engine may still index a URL based on links from other pages, without reading the page content. Google says it aims to make such pages less visible in the future.
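You can test a Disallow rule before publishing it. The sketch below uses `urllib.robotparser` from Python's standard library, which is not Google's parser and may differ from it in edge cases. The robots.txt contents, the `example.com` URLs, and the `may_crawl` helper are assumptions for illustration. Keep in mind that the result only says whether a crawler may fetch the URL, not whether the URL can appear in the index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(url, user_agent="Googlebot"):
    """Return True if the rules above allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(may_crawl("https://example.com/private/report.html"))
print(may_crawl("https://example.com/blog/"))
```

The first URL falls under the Disallow rule and is blocked, while the second is not matched by any rule and stays crawlable.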

5. Search Console Remove URL tool

One of the easiest ways to remove a URL temporarily from Google's search results is the Remove URL tool in Google Search Console. Because this is a temporary removal, the request lasts about 90 days. After that, the page can reappear in Google's results.

Google is playing it smart to make its algorithm more reliable for users. The update released in September 2019 will fix the issues with noindex directives in robots.txt and help Google maintain a high standard as a search engine for the long term.

For the official announcement, read Google's official page.
